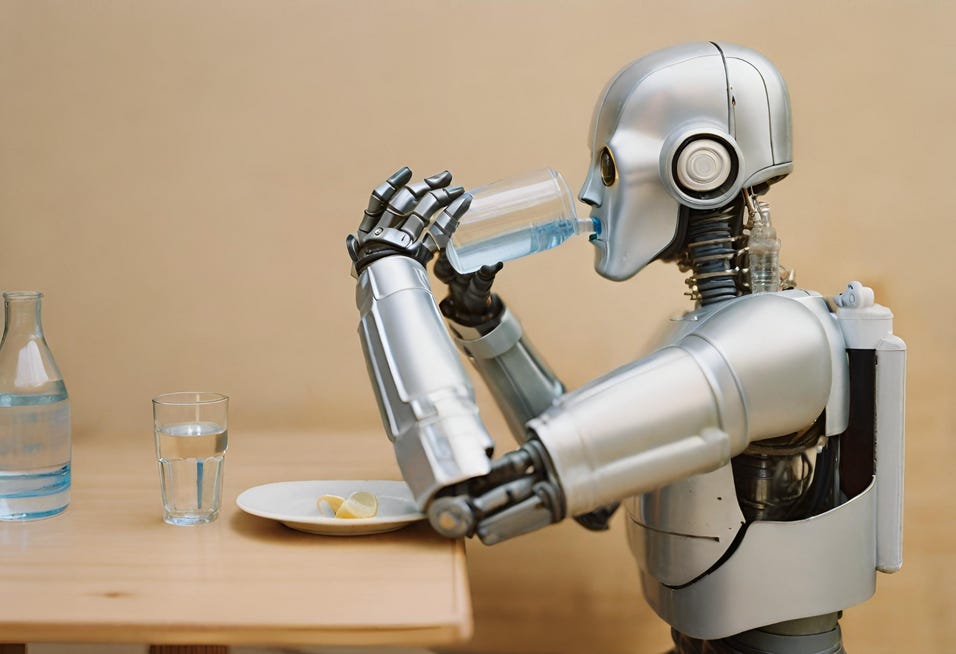

AI has a terrible thirst ...

Exploring the surprising environmental cost of Artificial Intelligence.

It’s funny how some of the greatest polluters are also some of the least visible.

Take Cloud Computing (or the ‘Cloud’) as an example. To the average Joe, it’s as mysterious as it is innocuous. A ‘black box’ service that brings to mind an ephemeral realm of light, fluffiness and clear blue skies. This metaphor couldn’t be further from the truth.

Behind every Cloud there is not a silver lining but a data centre: vast temples of computational power and storage, comprising miles of cabling, fibre optics, computer servers and more, all consuming huge quantities of electricity, water, air, metals, minerals and rare earth elements.

The environmental cost is absolutely staggering. The carbon footprint of the Cloud surpasses that of the airline industry. A single data centre can consume as much energy as 50,000 homes (and there are 8,000 data centres globally). Each year, data centres collectively devour 200 terawatt hours (TWh) of electricity, more than some nation states. When you include all the networked devices that make use of the Cloud (i.e. laptops, smartphones and tablets), it accounts for more than 2% of global carbon emissions … it really should be called Carbon Computing!

Bitcoin is another silent environmental killer. To mint a bitcoin, you first have to “mine” it. Miners’ computers race to solve a computational puzzle: repeatedly guessing a number until the cryptographic hash of a block of transactions falls below a target value. The first to succeed validates and records that block on the blockchain and is rewarded with newly created bitcoin plus transaction fees. This is performed at an industrial scale on specialised hardware: first GPUs (graphics processing units), which require 10-15 times the energy of traditional CPUs (central processing units), and today mostly purpose-built ASIC (application-specific integrated circuit) chips. As a result, Bitcoin mining uses more electricity than Norway and Ukraine combined.
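For the curious, here is a toy sketch in Python of how that mining works. It is a simplification, not Bitcoin’s real block format: the `mine` function, the sample data and the difficulty levels are illustrative assumptions. It does follow the same basic idea of hashing twice with SHA-256 and guessing nonces until the result falls below a target.

```python
import hashlib


def double_sha256(data: bytes) -> bytes:
    """Hash twice with SHA-256, as Bitcoin does for block headers."""
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()


def mine(block_data: bytes, difficulty_bits: int, max_nonce: int = 2**32):
    """Toy proof-of-work (hypothetical helper, not Bitcoin's real format).

    Guesses nonces until the hash, read as a number, is below the target.
    Each extra difficulty bit halves the target, doubling the expected
    number of guesses, and every guess costs electricity.
    """
    target = 2 ** (256 - difficulty_bits)
    for nonce in range(max_nonce):
        digest = double_sha256(block_data + nonce.to_bytes(4, "little"))
        if int.from_bytes(digest, "big") < target:
            return nonce, digest.hex()
    return None  # no valid nonce found in the search range


if __name__ == "__main__":
    for bits in (8, 12, 16):
        nonce, digest = mine(b"example transactions", bits)
        print(f"{bits} bits: nonce {nonce} -> {digest[:16]}...")
```

Each extra bit of difficulty doubles the expected number of guesses. The real network’s difficulty is astronomically higher than these toy values, which is where all that electricity goes.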

So, what has this got to do with Artificial Intelligence? Well, AI runs in the Cloud and also uses GPUs (and lots of them); one report estimates that OpenAI's ChatGPT will eventually need over 30,000 GPUs. All of that computing is not just energy-hungry; it’s also extremely thirsty.

AI consumes water in two ways, directly and indirectly, and it guzzles the most during the ‘training’ phase: the period in which AI is fed data and learns how to respond.

Indirect consumption relates to the off-site generation of the power used by data centres, for example the water used in the cooling towers of coal-fired power stations. Direct consumption relates to the water used on-site by data centres for cooling. Almost all of the power consumed by data centre servers is converted into heat, and the sheer size of these installations makes the problem worse. That heat must be relentlessly removed to keep everything whirring, 24 hours a day, every day, and water is used to cool the equipment and stop it overheating.
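To get a feel for why cooling is so thirsty, here is a back-of-the-envelope sketch in Python. It assumes, as a rough idealisation, that all of the heat is carried away by evaporating water (real cooling systems also shed heat in other ways and lose extra water to blowdown and drift), and it uses water’s latent heat of vaporisation of about 2.4 MJ/kg at typical cooling temperatures. The 1 MW server hall is a hypothetical example.

```python
# Rough estimate of water evaporated to reject server heat.
# Assumption: ALL heat leaves via evaporation (an idealisation).

LATENT_HEAT_MJ_PER_KG = 2.43  # latent heat of vaporisation of water near 25-30 °C
MJ_PER_KWH = 3.6              # 1 kWh = 3.6 MJ
KG_PER_LITRE = 1.0            # approximate density of water


def litres_evaporated(heat_kwh: float) -> float:
    """Litres of water needed to carry away heat_kwh of heat by evaporation."""
    heat_mj = heat_kwh * MJ_PER_KWH
    return heat_mj / LATENT_HEAT_MJ_PER_KG / KG_PER_LITRE


if __name__ == "__main__":
    print(f"~{litres_evaporated(1.0):.2f} L of water per kWh of heat")
    # Hypothetical 1 MW hall running for a full day: 1,000 kW x 24 h = 24,000 kWh
    print(f"~{litres_evaporated(24_000):,.0f} L per day for a 1 MW hall")
```

That works out at roughly one and a half litres of water evaporated for every kilowatt-hour of heat rejected this way.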

According to one estimate, ChatGPT gulps a 500ml bottle of water for every series of 5 to 50 prompts or questions (the range depends on which data centre serves the requests and where it is located). The estimate also includes indirect water usage, such as cooling the power plants that supply the data centres with electricity.
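The arithmetic behind that estimate is simple enough to sketch in Python. The user sending 20 prompts a day is a hypothetical example, not a figure from the estimate.

```python
BOTTLE_ML = 500  # one 500 ml bottle per series of prompts (from the estimate)


def ml_per_prompt(prompts_per_bottle: int) -> float:
    """Water per prompt if one bottle covers `prompts_per_bottle` prompts."""
    return BOTTLE_ML / prompts_per_bottle


def daily_ml(prompts_per_day: int, prompts_per_bottle: int) -> float:
    """Water attributable to a (hypothetical) user's daily prompts."""
    return prompts_per_day * ml_per_prompt(prompts_per_bottle)


if __name__ == "__main__":
    for n in (5, 50):  # the two ends of the estimated range
        print(f"{n} prompts per bottle -> {ml_per_prompt(n):.0f} ml per prompt, "
              f"{daily_ml(20, n):.0f} ml for 20 prompts a day")
```

In other words, somewhere between 10 ml and 100 ml per prompt, or between a glass and two litres a day for our hypothetical user.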

In its latest environmental report, Microsoft disclosed that its global water consumption spiked 34% from 2021 to 2022 (to nearly 1.7 billion gallons, or more than 2,500 Olympic-sized swimming pools), a sharp increase on previous years, which outside researchers tie to its AI research.
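As a sanity check on the swimming-pool comparison, here is the conversion in Python. It assumes US gallons and the common convention that an Olympic pool (50 m × 25 m × 2 m) holds about 2,500 m³, or 2.5 million litres. The 2021 figure is simply backed out of the 34% increase, so it is only approximate.

```python
LITRES_PER_US_GALLON = 3.785411784
OLYMPIC_POOL_LITRES = 2_500_000  # assumed: 50 m x 25 m x 2 m = 2,500 m³


def gallons_to_pools(gallons: float) -> float:
    """Convert US gallons to Olympic-sized swimming pools."""
    return gallons * LITRES_PER_US_GALLON / OLYMPIC_POOL_LITRES


if __name__ == "__main__":
    water_2022 = 1.7e9                # "nearly 1.7 billion gallons"
    implied_2021 = water_2022 / 1.34  # undo the reported 34% rise
    print(f"2022: ~{gallons_to_pools(water_2022):,.0f} pools")
    print(f"Implied 2021: ~{implied_2021 / 1e9:.2f} billion gallons")
```

That gives roughly 2,570 pools, consistent with the “more than 2,500” quoted in the report coverage.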

Solving this problem is not easy, and some of the solutions are as wild as they are innovative. In 2018, Microsoft sank a data centre off the Scottish coast and proved that underwater data centres are not only feasible but also logistically, environmentally and economically practical. Then there’s the ASCEND (Advanced Space Cloud for European Net zero emissions and Data sovereignty) programme, which aims to deploy data centres in Earth’s orbit and demonstrate that data centres in outer space can substantially reduce their carbon footprint by using solar energy beyond Earth’s atmosphere for power and the freezing vacuum of space for cooling.

Another option is to replace water-intensive and costly cooling processes with non-conductive fluids. These can be applied either via immersion cooling, where servers are submerged so that heat is removed immediately (see Submer, who are dunking servers in eco-goo to save the planet), or via spray cooling, where the processor (the hottest component) is directly misted.

Ironically, AI might also be its own cure: chip manufacturers can use AI to design faster, smaller (and more energy-efficient) chips, data centre architects can use it to design and build lean, smart data centres, and data centre operators can combine AI with robotics to improve energy efficiency and reduce carbon emissions. A Gartner report states that by 2025, half of cloud data centres will deploy advanced robots with AI and ML capabilities, resulting in 30% higher operating efficiency.

These solutions are much needed. Globally, about 4 billion people, or half the world’s population, are exposed to extremely high water stress for at least one month a year. This raises the prospect that data centres are consuming water that should be reserved for at-risk communities. In the summer of 2022, Thames Water raised concerns about data centres around London using drinking water for cooling during a drought. This problem is only likely to get worse as the climate crisis deepens and weather patterns become more unpredictable.

As they say, the first step to recovery is acceptance, so it’s great to see that the technology majors (Google, Microsoft, OpenAI, etc.) have all acknowledged that the growing demand for AI carries a hefty environmental cost. Let’s hope they can apply their considerable creativity, ingenuity and resources to solving the problem!

Till next month …